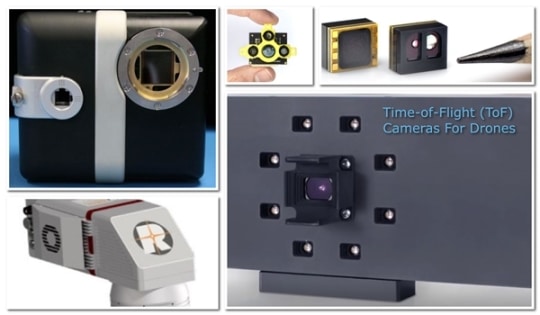

A flash lidar, also known as a ToF (Time-of-Flight) camera sensor, has numerous powerful uses on a drone or ground-based system.

These flash lidar Time-of-Flight camera sensors can be used for object scanning, distance measurement, indoor navigation, obstacle avoidance, gesture recognition, object tracking, volume measurement, reactive altimeters, 3D photography, augmented reality games and much more.

This article explains what flash lidar Time of Flight 3D depth ranging camera sensor technology is, how it works and which sectors are benefiting from it.

Also, we look at the various flash lidar ToF sensor manufacturers and full-solution ToF providers. There is plenty of additional information below on the advantages and benefits of using flash lidar ToF cameras across many sectors.

Drones are making a huge impact in multiple sectors, and you can read more in this article on the best uses for drones. The real benefits of drones come when various sensors are mounted on them to create new solutions.

The information provided here, which includes ToF videos, covers both ground-based and aerial ToF.

What is Flash Lidar ToF Camera Technology – Answered

Time-of-Flight flash lidar 3D camera sensor technology is still relatively young, so there is a huge amount of innovation to come.

Time of Flight (ToF) is a highly accurate distance mapping and 3D imaging technology. Time-of-Flight 3D depth sensors emit a very short infrared light pulse and each pixel of the camera sensor measures the return time.

Flash lidar Time-of-Flight cameras have a huge advantage over other technologies, as they are able to measure the distances within a complete scene in a single shot.

ToF is one of several techniques known as range imaging. The sensor device used to produce the range image is sometimes referred to as a range camera. Other range imaging techniques include stereo triangulation, sheet-of-light triangulation, structured light, interferometry and coded aperture.

Range imaging Time-of-Flight cameras are highly advanced LiDAR systems, which replace point-by-point scanning laser beams with a single light pulse or flash to achieve full spatial awareness.

The camera senses the time it takes light to return from surrounding objects, combines it with video data and creates real-time 3D images.

This can be used to track facial or hand movements, map out a room, remove background from an image or even overlay 3D objects in an image. Plus much more as you will see below.

Flash Lidar Time of Flight Sensor Technology Principle

Time-of-Flight (ToF) is a method for measuring the distance between a sensor and an object, based on the time difference between the emission of a signal and its return to the sensor, after being reflected by an object.
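The principle above reduces to one line of arithmetic: the light travels to the object and back, so the one-way distance is half of the speed of light multiplied by the measured time. The minimal sketch below assumes the pulse travels at the vacuum speed of light (close enough in air) and uses hypothetical helper names. It also shows why timing precision matters so much: every nanosecond of timing error is roughly 15 cm of range.

```python
# Speed of light in vacuum in m/s (exact by SI definition); close enough for air here.
SPEED_OF_LIGHT = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Return the sensor-to-object distance (m) for a measured round-trip time (s).

    The light travels out to the object and back again, so the one-way
    distance is half of speed * time.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def round_trip_for_distance(distance_m: float) -> float:
    """Inverse helper: how long (s) the echo takes to come back from distance_m."""
    return 2.0 * distance_m / SPEED_OF_LIGHT


if __name__ == "__main__":
    # An object 10 m away returns the pulse after about 66.7 ns.
    print(f"{round_trip_for_distance(10.0) * 1e9:.1f} ns")  # prints 66.7 ns
    # One nanosecond of timing error corresponds to about 15 cm of range.
    print(f"{distance_from_round_trip(1e-9) * 100:.1f} cm")  # prints 15.0 cm
```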

ToF flash lidar is scannerless, meaning that the entire scene is captured with a single light pulse (flash), as opposed to point by point with a rotating laser beam. Time-of-Flight cameras capture a whole scene in three dimensions with a dedicated image sensor, and therefore have no need for moving parts.

How Flash Lidar ToF Camera Sensors Work

A 3D Time-of-Flight laser radar with a fast-gated intensified CCD camera can achieve sub-millimeter depth resolution. With this technique, a short laser pulse illuminates a scene, and the intensified CCD camera opens its high-speed shutter for only a few hundred picoseconds.

The 3D ToF information is calculated from the 2D image series which was gathered with increasing delay between the laser pulse and the shutter opening.
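To make the range-gating idea concrete, here is a minimal sketch, assuming the image series is already available as nested lists of intensities with one known shutter delay per frame. For each pixel it simply picks the delay at which that pixel was brightest and converts it into a distance. This peak-picking is a simplification chosen for clarity; real systems typically interpolate between gates to reach the sub-millimeter resolution mentioned above. The function name is hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def depth_from_gated_series(frames, delays_s):
    """Estimate per-pixel depth (m) from a range-gated image series.

    frames[k][row][col] is the intensity captured when the shutter opened
    delays_s[k] seconds after the laser pulse. Each pixel's round-trip time
    is taken to be the delay of its strongest return (simple peak picking).
    """
    if not frames or len(frames) != len(delays_s):
        raise ValueError("need one delay per frame and at least one frame")
    rows, cols = len(frames[0]), len(frames[0][0])
    depth = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Index of the gate (shutter delay) where this pixel was brightest.
            best = max(range(len(frames)), key=lambda k: frames[k][r][c])
            # Halve the round trip to get the one-way distance.
            depth[r][c] = SPEED_OF_LIGHT * delays_s[best] / 2.0
    return depth
```

For example, with gates at 0, 10 and 20 ns, a pixel that peaks in the 10 ns frame is placed at about 1.5 m, and one that peaks in the 20 ns frame at about 3.0 m.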

Here is an excellent video which explains Time-of-Flight technology very simply.

Time of Flight LiDAR or Flash LiDAR Versus Lidar

Time-of-Flight cameras are also referred to as Flash LiDAR or Time-of-Flight LiDAR. There is quite a difference in technology between Time-of-Flight flash lidar and scanning lidar. To explain all the differences, here is an excellent video called “LiDAR and Time-of-Flight sensing”, which also covers computer vision for visual effects.

You can also read our post on Lidar sensors entitled “12 Top Lidar Sensors For UAVs And Terrific Uses”.

New Solutions With 3D ToF Cameras

This new 3D Time-of-Flight sensor technology delivers accurate depth information at high frame rates in a low-cost, solid-state camera. The additional third dimension is taking standard image processing and image understanding to a whole new level. It is opening up multiple new techniques in industrial inspection, automation and logistics, as well as in medical applications and human-computer interaction.

Gesture & Non-Gesture ToF Applications

Generally speaking, 3D Time-of-Flight uses can be categorized into gesture and non-gesture applications. Gesture applications emphasize human interaction and speed, while non-gesture applications emphasize measurement accuracy.

Gesture applications translate human movements (faces, hands, fingers or whole-body) into symbolic directives to command gaming consoles, smart televisions, or portable computing devices.

For example, channel surfing can be accomplished by waving a hand, and presentations can be scrolled with a flick of the finger. These applications usually require fast response times, low to medium range, centimeter-level accuracy and low power consumption. The Microsoft Kinect 2 uses Time-of-Flight sensors for gesture interactions.

ToF 3D depth sensors also have uses in non-gesture applications, which are highlighted further down this article. The automotive industry is more advanced than the drone industry in using ToF depth-ranging cameras.

For instance, in automotive, a ToF camera can increase safety by alerting the driver when it detects people and objects in the vicinity of the car, and it can support computer-assisted driving. In robotics and automation, ToF sensors can help detect product defects and enforce the safety envelopes required for humans and robots to work in close proximity.

Some of the non-gesture ToF applications are object scanning, indoor navigation, outdoor navigation, obstacle detection, collision avoidance, object tracking, volumetric measurement, reactive altimeters, 3D photography, augmented reality games and much more.

Flash Lidar Time of Flight Signal Carriers

Various types of signals (also called carriers) are used with ToF, with sound and light being the most common.

Time of Flight Light Carrier

Using light as a carrier is common because it combines speed, range, low weight and eye safety. Infrared light suffers less signal disturbance and is easier to distinguish from natural ambient light, resulting in very high-performing sensors for their size and weight.

Time of Flight Sound Carrier

Ultrasonic sensors are used for determining the proximity of objects (reflectors) while the robot or UAV is navigating. For this task, the most conventional implementation is the Time-of-Flight sensor, which calculates the distance of the nearest reflector using the speed of sound in air and the emitted pulse and echo arrival times.
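The ultrasonic calculation works the same way as the light-based one, only with the speed of sound, which changes noticeably with air temperature. The sketch below assumes dry air and uses the common linear approximation of about 331.3 + 0.606 × T m/s (T in °C); the function names are hypothetical.

```python
def speed_of_sound(temp_c: float = 20.0) -> float:
    """Approximate speed of sound in dry air (m/s), linear in temperature (deg C)."""
    return 331.3 + 0.606 * temp_c


def echo_distance(emit_time_s: float, echo_time_s: float, temp_c: float = 20.0) -> float:
    """Distance (m) to the nearest reflector from pulse emission and echo arrival times.

    The sound travels out and back, so the one-way distance is half of
    speed * round-trip time.
    """
    round_trip = echo_time_s - emit_time_s
    if round_trip <= 0:
        raise ValueError("the echo must arrive after the pulse is emitted")
    return speed_of_sound(temp_c) * round_trip / 2.0
```

At 20 °C an echo arriving 10 ms after the pulse puts the reflector about 1.72 m away. Ignoring temperature would introduce a small range error that grows with distance, which is why the temperature is kept as a parameter.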

Time of Flight Sensor Flash Lidar Advantages

As an emerging technology, Time-of-Flight or 3D Flash LIDAR has a number of advantages over conventional point (single pixel) scanner cameras and stereoscopic cameras, including:

Simplicity

In contrast to stereo vision or triangulation systems, the whole system is very compact: the illumination is placed just next to the lens, whereas the other systems need a certain minimum baseline.

In contrast to laser scanning systems, no mechanical moving parts are needed.

A great advantage of a Time-of-Flight camera is being able to compose a 3D image of a scene in just one shot. Other 3D vision systems require multiple images or movement.

Efficient

Extracting the distance information from the output signals of a ToF sensor is a direct process. As a result, this task uses only a small amount of processing power, whereas stereo vision relies on complex correlation algorithms that require much more processing power and therefore more energy.

After the distance data has been extracted, object detection is also a straightforward process to carry out because the algorithms are not disturbed by patterns on the object.
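To show how direct that extraction can be, here is a sketch for the phase-modulation (continuous-wave) style of ToF pixel mentioned later in the Mesa/Heptagon section, rather than the pulsed approach described earlier. It assumes one common convention: each pixel takes four samples of the returned modulated light at 0°, 90°, 180° and 270° offsets, modelled as B + A·cos(φ − k·π/2). The phase delay φ then falls out of a single `atan2`, with no correlation search at all. The function names and the four-sample model are illustrative assumptions, not any specific sensor's API.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def cw_tof_distance(a0: float, a1: float, a2: float, a3: float, mod_freq_hz: float) -> float:
    """Distance (m) from four phase-stepped samples of a continuous-wave ToF pixel.

    Assumed sample model: a_k = B + A * cos(phi - k * pi / 2), k = 0..3, so
    a0 - a2 = 2A*cos(phi) and a1 - a3 = 2A*sin(phi).
    """
    # Recover the phase delay and wrap it into [0, 2*pi).
    phi = math.atan2(a1 - a3, a0 - a2) % (2 * math.pi)
    # The light covers the distance twice: phi = 4*pi*f*d / c.
    return SPEED_OF_LIGHT * phi / (4 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Beyond c / (2f) the phase wraps around and distances alias."""
    return SPEED_OF_LIGHT / (2 * mod_freq_hz)
```

At a 20 MHz modulation frequency the unambiguous range is about 7.5 m. This is a design trade-off of the phase-based approach: a higher frequency improves depth precision but shortens the distance before readings wrap around.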

Speed

Time-of-Flight 3D cameras are able to measure the distances within a complete scene with a single shot. As some cameras reach up to 160 frames per second, they are ideally suited to real-time applications.

Price

In comparison to other 3D depth range scanning technologies, such as structured light camera/projector systems or laser range finders, ToF technology is relatively inexpensive.

ToF Flash Lidar Technological Advantages

- Lightweight

- Full frame time-of-flight data (3D image) collected with a single laser pulse

- Unambiguous direct calculation of range

- Blur-free images without motion distortion

- Co-registration of range and intensity for each pixel

- Pixels are perfectly registered within a frame

- Ability to represent the objects in the scene that are oblique to the camera

- No need for precision scanning mechanisms

- Combine 3D Flash LIDAR with 2D cameras (EO and IR) for 2D texture over 3D depth

- Possible to combine multiple 3D Flash LIDAR cameras to make a full volumetric 3D scene

- Smaller and lighter than point scanning systems

- No moving parts

- Low power consumption

- Ability to “see” through obscurants such as fog, smoke, mist, haze and rain, using a technique known as range gating

ToF Flash Lidar Camera Price

What is really terrific about Time-of-Flight infrared 3D camera sensors is that they are reasonably priced. Prices start at around USD 150 for a ToF sensor such as the TeraRanger One, mentioned below.

At the same time, ToF camera prices can go all the way up to thousands of dollars. ToF sensors vary widely in features, specifications and applications, so it is hard to compare one against another.

3D ToF Flash Lidar Camera Sensor Manufacturers

AMS / Heptagon: Has a few Time-of-Flight flash lidar sensors for a wide variety of solutions. The Heptagon Laura and Lima Time-of-Flight 3D sensors are used on drones and robots.

Heptagon was acquired by AMS in January 2017. Heptagon had over 20 years of experience in the ToF flash lidar sector and owned the whole ToF technology stack, including ToF pixel design, analog and mixed-signal design, ASIC development and embedded software design.

The first ToF concepts were invented and demonstrated at CSEM, from which Mesa Imaging was spun off. The technology was further developed and industrialized in the “SwissRanger” series (no longer in production) – the benchmark for industrial 3D cameras.

During this time a strong patent portfolio in ToF pixels and 3D measurement concepts was built up.

In 2014, Heptagon acquired Mesa. This combined Mesa’s top-class “phase-modulation ToF” pixels with Heptagon’s unique packaging and optics technologies and mass production know-how.

ASC TigerCub: This 3D Flash LIDAR with Zephyr Laser Camera from ASC is a small form-factor integrated 3D camera. It captures a full 128 x 128 array of independently triggered pixels in each frame. This allows about 16,000 (128 x 128 = 16,384) individual 3D range and intensity points to be generated per laser pulse (frame), at up to 20 frames per second, as 3D point cloud images or video streams in real time.

The TigerCub flash lidar ToF camera weighs in at 1.4 kg (3 lbs), so you do need a fairly powerful drone to lift this sensor. ASC’s 3D focal plane array and laser technologies have been tested and used in a wide range of applications. The on-board processing in all ASC 3D cameras allows for streaming 3D point cloud and intensity output as well as camera telemetry.

The 3D data output is used to support autonomous operations such as rendezvous, proximity operations and landing.
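A 128 x 128 flash lidar frame is 16,384 range readings, and at 20 frames per second that is about 327,680 points per second. Turning each frame into a point cloud is a small geometric step. The sketch below assumes a simple pinhole camera model with hypothetical intrinsics (`fx`, `fy`, `cx`, `cy` in pixels) and assumes each pixel reports distance along its own line of sight, as a ToF pixel does. It is not ASC's processing pipeline, just the underlying idea.

```python
import math


def range_image_to_points(ranges, fx, fy, cx, cy):
    """Convert a flash lidar range image into a list of (x, y, z) points in metres.

    ranges[v][u] is the distance measured along pixel (u, v)'s line of sight
    (0 or None means no return). fx, fy, cx, cy are pinhole intrinsics in
    pixels; they are hypothetical values, not a real camera's calibration.
    """
    points = []
    for v, row in enumerate(ranges):
        for u, r in enumerate(row):
            if not r:
                continue  # skip pixels with no valid return
            # Direction of this pixel's ray in camera coordinates (z points forward).
            dx, dy, dz = (u - cx) / fx, (v - cy) / fy, 1.0
            norm = math.sqrt(dx * dx + dy * dy + dz * dz)
            # ToF measures distance along the ray, so scale the unit ray by r.
            points.append((r * dx / norm, r * dy / norm, r * dz / norm))
    return points
```

Because the range is measured along each ray rather than straight ahead, pixels near the edge of the frame end up with a smaller z than the centre pixels for the same reported range, which is exactly what a flat wall seen at an angle should look like.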

TeraRanger One – This flash lidar ToF sensor weighs only 8 grams and costs from USD 150 upwards. It is ideal for drone and robotic operations. TeraRanger has a number of ToF depth sensor products and they are extremely popular. The TeraRanger One ToF camera has a wide variety of applications, such as:

- TeraRanger One aids vision-based drone intelligence

- Detecting glass surfaces with infrared Time-of-Flight

- TeraRanger One helps ensure vineyard health with drone-based solution

- Plug and Play sensor arrays

- Drone automatic re-initialization and failure recovery system

- Drone flies in the forest with collision avoidance

Now, here is a nice introduction to the TeraRanger One ToF camera.

Riegl – Provides ToF, LiDAR and various other 3D cameras and scanners with a wide array of performance characteristics and applications. Their sensors work across multiple platforms, including unmanned, airborne, mobile and terrestrial 3D ToF and laser solutions.

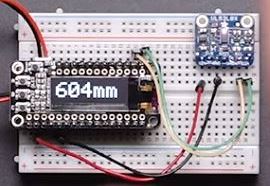

Adafruit – Founded in 2005 by MIT hacker and engineer Limor “Ladyada” Fried, whose goal was to create the best place online for learning electronics and to make the best-designed electronic products for makers of all ages and skill levels. Adafruit has grown to over 100 employees in the heart of NYC, with a 50,000+ square foot factory.

The Adafruit VL53L0X ToF sensor contains a very tiny invisible laser source and a matching sensor. The VL53L0X detects the “time of flight”, or how long the light has taken to bounce back to the sensor. Since it uses a very narrow light source, it is good for determining the distance of only the surface directly in front of it.

Unlike sonars that bounce ultrasonic waves, the ‘cone’ of sensing is very narrow. Unlike IR distance sensors, which try to measure the amount of light bounced back, the VL53L0X is much more precise and doesn’t have linearity problems or ‘double imaging’, where you can’t tell if an object is very far or very close.

The VL53L0X is the ‘big sister’ of the VL6180X ToF flash lidar sensor and can measure distances from about 50 mm to 1200 mm. If you need a smaller/closer range, check out the VL6180X, which can measure 5 mm to 200 mm and also contains a light sensor.

Combining Flash Lidar ToF Sensors With Other Sensors To Create Solutions

Many technology companies combine ToF cameras with other sensors, software and algorithms to provide a comprehensive solution.

For example, a drone might combine Time-of-Flight, LiDAR, Stereo Vision, and Ultrasonic sensors for its autonomous flight modes and collision avoidance system.

Another example would be products like the Microsoft Kinect 2 technology in the Xbox One, or gesture modes on drones for taking selfies. These combine technologies such as computer vision, signal processing and machine learning with Time-of-Flight technology to create the product or a feature within it.

In agriculture, drones are equipped with multispectral sensors, which may also include ToF sensors, to monitor the health of crops. More on this later.

In collision avoidance drones, ToF sensors are being used along with other sensors, such as vision or LiDAR, to avoid obstacles. The ToF sensor can also work as a reactive altimeter. More on this further down this article.

Here is another series of terrific articles about drones using various sensors across multiple sectors.

Flash Lidar ToF Cameras, Local or Cloud Storage And Software Solution

Mounting a Time-of-Flight camera sensor onto a drone and taking images of scenes won’t do anything on its own. A full ToF solution is required. First, the ToF depth sensor is mounted onto the drone. Next, the drone flies over the target or object while the ToF camera is recording.

The images can be viewed in real time, but they can also be transferred and stored. Specialized software then generates the 3D image, map or film, which can be analysed and interpreted into meaningful information that can be acted upon.

Full Flash Lidar ToF Solution

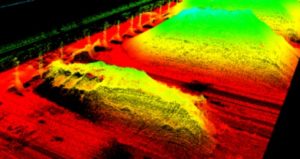

Here’s an example of a full solution by Kespry for measuring stockpiles (stone, gravel, rock, pulp, paper, logs, wood chip, mulch, manure, etc.).

You fly the Kespry 2 drone over the stockpile. While flying over it, the on-board ToF camera sensor is able to capture the whole stockpile in three dimensions with a single light pulse. This data is automatically uploaded to their cloud storage.

Next, data processing takes place, which allows the aggregate measurement of each stockpile, including its perimeter, area and volume, in less than one minute. More detailed information can be generated within 1 to 6 hours. Whether you’re measuring sand, rock or wood stockpiles, all it takes is a few clicks.

In addition, stockpile density and cost factors can be entered to calculate stockpile weight and value which is ideal for stockpile inventory reports. Even odd-shaped stockpiles against walls can be accurately measured with this ToF solution.
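The volume, weight and value steps can be sketched in a few lines once the 3D data has been turned into a regular height grid. This is a minimal sketch, not Kespry's method: it assumes a flat base surface at a known height, square grid cells of known area, and user-entered density and price factors (the 1.6 t/m³ and 20-per-tonne figures below are purely hypothetical). Real stockpile software typically fits a base surface around the pile's toe instead of assuming a flat one, which matters for odd-shaped piles against walls.

```python
def stockpile_volume(heights_m, base_m, cell_area_m2):
    """Volume (m^3) above a flat base from a gridded height map.

    Each cell contributes (surface height - base height) * cell area;
    cells at or below the base contribute nothing.
    """
    return sum(max(h - base_m, 0.0) * cell_area_m2
               for row in heights_m for h in row)


def stockpile_weight_and_value(volume_m3, density_t_per_m3, price_per_t):
    """Apply user-entered density and price factors to a measured volume."""
    weight_t = volume_m3 * density_t_per_m3
    return weight_t, weight_t * price_per_t


if __name__ == "__main__":
    # Hypothetical 3 x 3 height grid (metres) with 1 m x 1 m cells.
    grid = [[0.0, 1.0, 0.0],
            [1.0, 3.0, 1.0],
            [0.0, 1.0, 0.0]]
    vol = stockpile_volume(grid, base_m=0.0, cell_area_m2=1.0)
    weight, value = stockpile_weight_and_value(vol, 1.6, 20.0)
    print(f"{vol:.1f} m^3, {weight:.1f} t, value {value:.2f}")
```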

What Can Flash Lidar ToF Camera Sensors On Drones Be Used For – Answered

The main uses for Time-of-Flight cameras are in the following areas:

- Indoor navigation

- Gesture recognition

- Object scanning

- Collision avoidance

- Track objects

- Measure volumes

- Surveillance of a target zone

- Count objects or people

- Fast precise distance-to-target readings

- Augmented reality / virtual reality

- Estimate size and shape of objects

- Enhanced 3D photography

This ToF technology is being used by more than just the drone sector. It is being applied in various fields, such as:

- Logistics/warehouses

- Farm automation

- Surveillance & security

- Robotics

- Medicine

- Gaming

- Photography

- Film making

- Automotive

- Archaeology

- Environment projects

Flash Lidar ToF 3D Augmented Reality Drones

Augmented reality (AR) is a live direct or indirect view of a physical, real-world environment whose elements are augmented (or supplemented) by computer-generated sensory input such as sound, video, graphics or GPS data.

ToF Augmented Reality Drone Games

The Walkera Aibao drone, along with its software app, allows you to play a virtual reality flight game in the real world. It is the first drone which allows the real world to intertwine with the virtual world.

You can take this drone out into an open space and play virtual reality flight and fight games there. The games offer Racing, Combat and Collection modes. Here is a short Aibao game drone video. It sure is fun.

Flash Lidar ToF Cameras For Drone Indoor Navigation

Navigating a drone, or even a robot, successfully and safely indoors presents a number of technical challenges. In robotic mapping, simultaneous localization and mapping (SLAM) is the computational problem of constructing or updating a map of an unknown environment while simultaneously keeping track of an agent’s location within it.

To put it more simply, SLAM is concerned with building a map of an unknown environment with a mobile robot while at the same time navigating the environment using that map.

Here is an example of how a drone using a Time-of-Flight depth-sensing camera can navigate indoors.

The Parrot ARDrone uses a downward facing camera to fly indoors without GPS. The basic mechanism used is that two photos are taken of the ground below the vehicle. The second photo is then compared to the first and an offset is calculated. This offset indicates how much the vehicle moved between the two photos.

This technique, combined with a Time-of-Flight 3D depth sensor pointed downwards provides a very accurate and low cost mechanism for indoor localization in both horizontal and vertical space.
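The reason the downward ToF sensor helps is scale: the photo comparison gives an offset in pixels, and only the height above the ground turns that into metres. The sketch below is a simplified stand-in for what a real optical-flow module does. It assumes small grayscale images stored as nested lists, finds the integer pixel shift by brute-force block matching (lowest mean absolute difference), and then applies the pinhole relation metres = pixels × altitude ÷ focal length. The function names and the focal length in pixels are hypothetical.

```python
def best_shift(prev, curr, max_shift=3):
    """Find the integer (dx, dy) such that curr[y + dy][x + dx] best matches prev[y][x].

    Brute-force block matching: try every shift within +/- max_shift pixels
    and keep the one with the lowest mean absolute difference over the overlap.
    """
    rows, cols = len(prev), len(prev[0])
    best, best_err = (0, 0), float("inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            err, n = 0.0, 0
            for y in range(rows):
                for x in range(cols):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < rows and 0 <= xx < cols:
                        err += abs(curr[yy][xx] - prev[y][x])
                        n += 1
            if n and err / n < best_err:
                best, best_err = (dx, dy), err / n
    return best


def ground_displacement(shift_px, altitude_m, focal_px):
    """Scale an image shift (pixels) to metres on the ground using the ToF altitude.

    Without the altitude the pixel offset has no metric scale. The vehicle
    itself moves in the opposite direction to the apparent image shift.
    """
    dx, dy = shift_px
    return dx * altitude_m / focal_px, dy * altitude_m / focal_px
```

For example, a 2-pixel shift seen from 5 m with a 100-pixel focal length corresponds to 0.1 m of apparent ground motion. The same 2-pixel shift at 10 m would mean twice as much, which is why an accurate height reading improves horizontal localization as well.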

You can read more on this subject in the following articles:

Indoor Autonomous Flight Using Pixhawk

SLAM + AI = A Smart Autonomous 3D Mapping Drone

Flash Lidar ToF Cameras For 3D Shape Scanning

Drones are presently being used to create accurate 3D models of structures and monuments. In 2015, a drone successfully created a 3D image of the Christ the Redeemer statue in Brazil. Before this, there had been no accurate models of this statue anywhere.

There is a huge market just in surveying and creating highly accurate models of ancient monuments and heritage sites.

The mapping of the Christ the Redeemer statue used 3D photogrammetry, which meant the drone had to fly around the statue taking thousands of pictures, which were then stitched together using 3D mapping software.

Other 3D shape scanning technologies use specialized and complex sensors, such as structured light camera/projector systems or laser range finders. Even though these produce high-quality data, they are quite expensive and often require expert knowledge to operate.

Harvard University has come up with a very cost-effective 3D shape scanning solution using a Time-of-Flight camera. This solution was ground based; however, it could also work when mounted on a drone.

With more innovation and investment dollars in this area, we could well see ToF cameras becoming the cost effective solution to scanning large objects and monuments.

You can read more on the Harvard University project entitled 3D Shape Scanning with a Time-of-Flight Camera.

Drones With Flash Lidar ToF Cameras In Agriculture

In agriculture, drones with multispectral sensors allow the farmer to manage crops and soil more effectively. These multispectral imaging agriculture drones use remote sensing technology in the Green, Red, Red-Edge and Near Infrared wavebands to capture both visible and invisible images of crops and vegetation.

The data from multispectral sensors is very useful in the prevention of disease and infestation. It also greatly assists the farmer in calculating the correct amount of water, fertilizers and sprays to use on crops.

ToF cameras can be used as part of an agricultural multispectral solution in a number of ways.

Time-of-Flight cameras are excellent at measuring volumes very quickly. They can capture a complete scene in a single shot. ToF 3D sensors are ideal for measuring crop density and giving volume data on mulch and manure stockpiles.

When collecting crop data, the more stable the drone’s flight, the more accurate the data, images and film will be. Smoother flight improves the data acquisition process and also contributes to longer flight autonomy.

ToF sensors such as the TeraRanger One can be used as a very precise reactive altimeter. Compared to laser-based systems with a very narrow field of view, the TeraRanger ToF camera provides a much smoother and more stable relative altitude as the drone alternates between flight over the ground and over the vines.
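Why would a wider field of view give a smoother altitude over vines? The toy model below illustrates the idea under one loud assumption: that a sensor whose footprint covers several ground positions reports the mean distance over that footprint, while a very narrow beam sees a single point. Real sensors do not necessarily average returns this way, and the vineyard profile (0 m ground, 1.5 m canopy) and 5 m flight height are hypothetical. Still, the model shows the mechanism: the narrow beam's reading jumps between ground and canopy, while the wide footprint smooths the rows out.

```python
import statistics


def altitude_readings(terrain_m, flight_alt_m, footprint_cells):
    """Toy reactive-altimeter model over a 1D terrain profile.

    terrain_m holds the surface height under each position. A sensor whose
    footprint covers footprint_cells positions is ASSUMED to report the mean
    distance over that footprint; footprint_cells=1 models a very narrow beam.
    """
    if footprint_cells < 1 or footprint_cells > len(terrain_m):
        raise ValueError("footprint must cover 1..len(terrain_m) cells")
    readings = []
    for i in range(len(terrain_m) - footprint_cells + 1):
        window = terrain_m[i:i + footprint_cells]
        # ASSUMPTION: the reported distance is the mean over the footprint.
        readings.append(flight_alt_m - sum(window) / footprint_cells)
    return readings


if __name__ == "__main__":
    # Hypothetical vineyard profile: ground, ground, canopy, canopy, repeating.
    terrain = [0.0, 0.0, 1.5, 1.5] * 5
    narrow = altitude_readings(terrain, 5.0, footprint_cells=1)
    wide = altitude_readings(terrain, 5.0, footprint_cells=4)
    print(statistics.pstdev(narrow), statistics.pstdev(wide))
```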

Here is a nice video which shows how a company called Chouette provides a full agricultural multispectral solution to vineyards in France. Their drone uses a TeraRanger One ToF sensor as a precision altimeter for more stable flight and better data acquisition.

Flash Lidar ToF Depth Imaging Camera To Measure Volumes

Flash lidar ToF cameras are being used to measure volumes such as packaging space in boxes in factory or warehousing environments. Outdoors, drones or cranes mounted with ToF cameras are able to measure the volume of stockpiles or truck loads filled with raw material.

A drone with a ToF mounted camera can fly over all or a part of a storage area and quickly calculate the amount of material deposited or the aggregate load the truck is carrying.
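For the indoor box-measuring case, a top-down depth map is enough for a rough volume estimate. This sketch assumes a camera looking straight down at a flat floor at a known distance, a flat-topped object, and a hypothetical `pixel_size_at_1m_m` describing how wide one pixel's footprint is at 1 m (under a pinhole model the footprint grows linearly with distance). Each pixel noticeably closer than the floor contributes its footprint area times its height above the floor.

```python
def box_volume_from_depth(depth_m, floor_m, pixel_size_at_1m_m, min_height_m=0.01):
    """Estimate an object's volume (m^3) from a top-down ToF depth map.

    Pixels closer than the floor by more than min_height_m are treated as the
    object. Each such pixel covers a square patch whose side is
    pixel_size_at_1m_m * distance and contributes patch area * height.
    """
    volume = 0.0
    for row in depth_m:
        for d in row:
            height = floor_m - d
            if height > min_height_m:
                side = pixel_size_at_1m_m * d  # pixel footprint grows with distance
                volume += side * side * height
    return volume
```

For example, with the camera 2 m above the floor and a box top seen at 1.5 m covering 10 x 10 pixels, each pixel covers 1.5 cm x 1.5 cm at that distance, giving 100 × 0.000225 m² × 0.5 m ≈ 0.011 m³. The `min_height_m` threshold is a design choice that keeps sensor noise on the floor from being counted as volume.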

Learn how Whitaker Contracting saved 22% per year in costs by measuring their stockpiles with drones carrying ToF cameras. They are making big savings while also measuring their stockpiles twice as frequently, and they now spend 75% less time on these measurements than they used to.

Here are another few interesting articles on how ToF depth sensors are able to quantify and measure volumes.

Time-of-flight (TOF) camera measures box volume

Use Of Time-of-Flight 3D Camera In Volume Measurement

Time-of-Flight Flash Lidar Camera Sensor To Measure Distance

Time of Flight sensors provide rapid measurement of distance between the ToF sensor and an object, based on the time difference between the emission of a signal and its return to the sensor, after being reflected by an object.

This next video shows a very simple and cost-effective way to measure distance using the CJVL53L0XV2 ToF distance sensor for Arduino. With the help of the Adafruit library, this ToF sensor is very easy to use. The ToF board is also very small, making it ideal for drones.
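Once the library is returning distance readings, a little post-processing makes them more dependable on a moving drone. The sketch below is a hypothetical helper, not part of the Adafruit library. It keeps only recent readings inside the roughly 50 mm to 1200 mm range quoted for the VL53L0X earlier in this article and returns their median, so a single spurious value (such as the 8190 in the example) does not cause a jump.

```python
import statistics

VL53L0X_MIN_MM = 50    # lower bound of the range quoted in this article
VL53L0X_MAX_MM = 1200  # upper bound of the range quoted in this article


def filtered_range_mm(raw_mm, window=5):
    """Median of the most recent in-range readings, or None if there are none.

    raw_mm is a list of distance readings in millimetres, oldest first. Only
    the last `window` readings are considered, and any reading outside the
    sensor's stated range is discarded before taking the median.
    """
    valid = [r for r in raw_mm[-window:] if VL53L0X_MIN_MM <= r <= VL53L0X_MAX_MM]
    return statistics.median(valid) if valid else None


if __name__ == "__main__":
    # Hypothetical readings with one spurious high value and one too-close value.
    readings = [412, 8190, 405, 30, 409, 411]
    print(filtered_range_mm(readings))
```

A median is used rather than a mean because one wild reading would drag the average far off, while the median simply ignores it. The trade-off is a small delay, set by `window`, before a genuine change in distance shows up.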

Photography Drones With ToF 3D Depth Cameras

3D is becoming very popular in TV and movies. Professional photographers, film makers and consumers are looking for cameras which can generate 3D content, whether video or stills. Some of this 3D technology is based on time-of-flight sensors.

Unlike a conventional camera, the ToF camera delivers not only a light-intensity image but also a range map which contains a distance measurement at each pixel, obtained by measuring the time required by light to reach the object and return to the camera (time-of-flight principle).
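Because the intensity image and the range map come from the same sensor, pixel (row, col) in one lines up exactly with pixel (row, col) in the other. That makes tricks like the background removal mentioned at the start of this article almost trivial. The sketch below assumes both images are same-sized nested lists, treats a range of 0 as "no return", and uses a hypothetical `max_distance_m` cut-off.

```python
def remove_background(intensity, range_m, max_distance_m, fill=0):
    """Keep intensity pixels closer than max_distance_m and blank the rest.

    intensity and range_m are same-sized 2D lists from the same ToF frame,
    so pixel (r, c) in one corresponds to pixel (r, c) in the other.
    A range of 0 is treated as "no return" and is blanked as well.
    """
    return [
        [i if 0 < d < max_distance_m else fill for i, d in zip(irow, drow)]
        for irow, drow in zip(intensity, range_m)
    ]
```

With a conventional camera the same job needs colour keying or segmentation, which is easily fooled by patterns and lighting. Here the cut is purely geometric, which is why it tends to be clean even against a busy background.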

You can find much more information on 3D cameras over on DPReview.

The cameras in drones have improved immensely over the past couple of years. Last year, a number of drones with 4K cameras came on the market. There were also 2 drones with zoom cameras: the Walkera Voyager and the DJI Inspire 1 with the Zenmuse Z3 camera.

So at some point in the not too distant future, we can expect to see 3D photography cameras on most drones.

Flash Lidar Time-of-Flight Warehousing Drones

Over the past few months, there have been a number of articles covering companies which are providing drones and solutions for warehousing. Here is an article regarding drones using RFID technology to count pallets and inventory in warehouses. ToF cameras can be used for object scanning and volume measurement.

Another article covers the use of ToF flash lidar cameras on the Eyesee drone from the Hardis Group. The Eyesee drone is equipped with an on-board camera and indoor geolocation technology which allows it to move using a predetermined flight plan and to capture relevant data on the pallets stored in the warehouse.

The drone then associates the captured image with its position in the warehouse and automatically translates its 3D position into a logistics address (storage location).
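Translating a 3D position into a storage location is essentially a lookup against the racking geometry. The sketch below does not describe the Eyesee system; it is a minimal illustration with an entirely hypothetical layout (aisle pitch, bay width, level height, counts) and a hypothetical `A02-B03-L2` address format, assuming positions are measured in metres from the corner of the first rack.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RackLayout:
    """Hypothetical warehouse racking geometry in metres, origin at the first rack."""
    aisle_pitch_m: float = 3.5   # spacing between aisles along y
    bay_width_m: float = 2.7     # width of one storage bay along x
    level_height_m: float = 1.5  # height of one racking level along z
    aisles: int = 10
    bays: int = 40
    levels: int = 6


def logistics_address(x_m, y_m, z_m, layout=RackLayout()):
    """Map a 3D position to an aisle/bay/level address string, or None if outside."""
    aisle = int(y_m // layout.aisle_pitch_m) + 1
    bay = int(x_m // layout.bay_width_m) + 1
    level = int(z_m // layout.level_height_m) + 1
    if not (1 <= aisle <= layout.aisles and 1 <= bay <= layout.bays
            and 1 <= level <= layout.levels):
        return None  # the position is outside the racking
    return f"A{aisle:02d}-B{bay:02d}-L{level}"
```

For example, a pallet imaged at x = 6.0 m, y = 4.0 m, z = 2.0 m would map to `A02-B03-L2` with this layout. The frozen dataclass is safe to use as a default argument because it cannot be modified.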

Gesture Recognition ToF On Drones

The Microsoft Kinect 2 uses Time-of-Flight 3D depth sensors in conjunction with computer vision, signal processing and machine learning. Smartphones will also start having more gesture commands integrated into apps.

There are a number of drones on the market with a gesture mode, which is useful for taking drone selfies. The DJI Mavic Pro and Phantom 4 Pro both have a gesture mode.

The DJI Mavic Air uses advanced gesture and face recognition for flying and filming. You can read and watch videos in this DJI Mavic Air review here.

DJI don’t give too much detail on the technology behind their gesture mode. Many of their autonomous flight modes and collision avoidance systems, combine Vision and Ultrasonic sensors along with sophisticated algorithms.

Overall, we will see more drones coming to the market with gesture modes and new autonomous flight modes with ToF playing its part.

Flash Lidar Time-of-Flight Cameras In Automotive

The automotive industry is developing multiple systems, including some excellent safety systems, using flash lidar Time-of-Flight sensors. This is why I believe the UAV sector will follow and start investing much more time and resources into Time-of-Flight technology. Here are some of the innovations coming through in the auto industry using ToF technology:

- Driver state monitoring like head position detection and drowsiness recognition

- Passenger classification, used for e.g. automatic setting of pre-defined passenger preferences, optimized head-up display visualization and optimally adjusted airbag deployment force

- Touch-less gesture control of e.g. infotainment, navigation and HVAC systems

- Surround view for sophisticated parking assist and obstacle detection

For example, the MLX75123 ToF camera sensor is a fully integrated companion chip from Melexis. It is well suited to automotive and non-automotive applications, including, but not limited to, gesture recognition, driver monitoring, skeleton tracking, people or obstacle detection and traffic monitoring.

Here is a terrific video of the latest Melexis ToF flash lidar camera, which will be used to improve safety in the automotive sector.

Drones With Collision Avoidance Systems

In 2016, we first began to see small consumer and business drones coming on the market which can detect obstacles. Depending on the drone, its sensing technology and its software, the drone either stops and hovers when it detects an object or navigates around it.
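The "stop and hover or navigate around" behaviour can be sketched as a small decision rule over a few ToF distance readings. This is a hypothetical illustration, not any manufacturer's logic. It assumes three sensors (front, left, right), `None` meaning "no return within range", and a hypothetical 5 m safety distance chosen to echo the detection range quoted for the Walkera Vitus later in this article.

```python
def avoidance_action(front_m, left_m, right_m, safe_distance_m=5.0):
    """Pick a simple reaction from three ToF distance readings (metres).

    None means no return, i.e. nothing detected within range. If the path
    ahead is clear, continue; otherwise sidestep towards the clearer side,
    or stop and hover if both sides are blocked as well.
    """
    def clear(d):
        return d is None or d > safe_distance_m

    if clear(front_m):
        return "continue"
    left_ok, right_ok = clear(left_m), clear(right_m)
    if left_ok and right_ok:
        # Both sides are open: prefer the side with more room (None = unlimited).
        left_room = float("inf") if left_m is None else left_m
        right_room = float("inf") if right_m is None else right_m
        return "sidestep_left" if left_room >= right_room else "sidestep_right"
    if left_ok:
        return "sidestep_left"
    if right_ok:
        return "sidestep_right"
    return "hover"
```

Hovering is the fallback because it is the one action that never moves the drone closer to a detected obstacle. Drones that "navigate around" objects layer path planning on top of a rule like this rather than relying on it alone.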

Now, one of the numerous uses of Time-of-Flight flash lidar cameras is obstacle and collision avoidance. However, while researching some of the latest drones on the market, I could find only 3 drones which use ToF for obstacle avoidance. One of these drones uses a ToF camera only to detect objects above it. Another uses a ToF camera in conjunction with other sensors to avoid obstacles.

As this is quite new technology and with more development, I believe we will see more drones using ToF flash lidar camera sensors for obstacle detection in the future.

Here is a quick look at drones which have autonomous collision avoidance systems and the type of sensors they use.

DJI Matrice M200 – This commercial drone from DJI has various uses, including all types of inspection (power lines, bridges, cell phone towers). It is very adaptable and can carry the Zenmuse X4S, X5S, Z30 and XT cameras. It can also carry a camera mounted on top of the quadcopter.

For flight autonomy, the DJI Matrice M200 combines various sensors for obstacle detection and collision avoidance. An upward-facing Time-of-Flight laser sensor recognizes objects above the M200, while stereo vision sensors detect objects below and in front of it.

Walkera Vitus – This mini fold-up consumer drone from Walkera, released in June 2017, has plenty of terrific new technology. It has obstacle avoidance in 3 directions (front, left and right) using infrared ToF sensor technology. Its 3 high-precision sensors and smart obstacle avoidance system can detect obstacles up to 5 meters (about 16 feet) away in those 3 directions.

The Walkera Vitus is a very nice introductory drone. It has excellent stabilization, a very nice camera and a number of intelligent flight modes, such as Follow, Orbit and Waypoint.

AscTec FireFly – In the video below, from 2015, Ascending Technologies (AscTec) demonstrates a revolutionary working collision avoidance system for drones. A TeraRanger One ToF sensor was used as a reactive altimeter in fast-changing natural light conditions. Ascending Technologies was bought by Intel in January 2016, and the FireFly drone is no longer available.

DJI Phantom 4 Pro – The highly advanced Phantom 4 Pro is equipped with an environment sensing system based on stereo vision sensors and infrared sensors. Three sets of dual vision sensors form a 6 camera navigation system that works constantly to calculate the relative speed and distance between the aircraft and an object.

Using this network of forward, rearward and downward vision sensors, the Phantom 4 Pro is able to hover precisely in places without GPS when taking off indoors, or on balconies, or even when flying through windows with minimal pilot control.

It is able to detect obstacles up to 98 feet (about 30 m) in front, allowing it to plan its flight path to avoid them or simply hover in the event of an emergency.

Combined with infrared sensors on its left and right sides, the Phantom 4 Pro can avoid obstacles in a total of four directions. Forward and rearward obstacle sensing allow the Phantom 4 Pro to fly at 31 miles per hour with full protection of its stereo vision obstacle sensing system.

However, we can see that DJI aren’t using ToF technology in the collision avoidance systems on the Phantom 4.

The Phantom 4 Pro is one of the best value drones on the market. It is widely used for all types of business and personal usage. It is extremely high tech and the Phantom 4 intelligent flight modes put this well ahead of similar priced drones.

Yuneec Typhoon H – This drone uses the Intel RealSense technology to avoid obstacles. RealSense integrates with Follow Me mode to avoid objects. It uses the Intel® RealSense™ R200 camera with an Intel atom powered module to build a 3D model of the world to stop the Typhoon H flying into obstacles.

This RealSense technology is capable of remembering its environment, further enhancing the prevention of possible collisions. It is not reactionary – if it avoids an obstacle once, it will remember the location of the obstacle and will automatically know to avoid it the next time.

The Intel RealSense camera has an IR laser projector that emits IR light into the scene where it is going to fly. An IR camera reads the emitted pattern. Based on the displacement of the pattern caused by objects in the scene, it can calculate the distance of the objects from the camera. This method of calculating depth is generally known as structured light, and it is how other 3D cameras, like the original Kinect, work.

The R200 sensor uses a static IR “dot” pattern, similar to how the Kinect 1 works as well as passive IR stereoscopy. Therefore the Yuneec Typhoon H doesn’t use the ToF flash lidar technique to avoid obstacles.